Terrafy Existing Azure Resources

One of the age-old questions in infrastructure as code is: what tool should I use? However, the question that follows is equally important: how do I get my existing resources defined in that tool?

The Azure team over at Microsoft has recently released a new tool to answer that question for my favorite IaC tool, Terraform, in the form of Azure Terrafy.

Azure Terrafy

The Azure Terrafy project is a really cool, open-source project whose entire goal is taking your existing Azure resources and codifying them into immediately usable Terraform code. The process is pretty straightforward too. Run the aztfy binary in an empty directory, then tell it which Azure Resource Group to use as a source. Aztfy will pull in all the different Azure objects and present them in a list, where you can specify whether to codify each one and what its Terraform resource name should be. Afterwards, you have a fully functional set of Terraform configuration files which can be used to manage the specified Azure objects. You can even run terraform plan immediately afterwards to verify.

This probably sounds too good to be true. Well, in my testing, it’s spot on. However, there are some caveats. The first is that the system you’re running aztfy from needs to have Azure CLI installed and authenticated. This is the backend authentication mechanism being used, and the only mechanism available. The second is that the workflow does not work with existing configurations. The tool expects to be run in an empty directory and will warn you if it isn’t. The last caveat is a bit of a non-issue: the tool generates hardcoded depends_on statements. Terraform handles dependencies extremely well on its own, but the generated code follows a very prescriptive step-by-step approach.

There are also some assumptions that I’m able to make based on the outputs. The first is that it will always generate resource definitions against the latest version of the AzureRM provider. The generated files pin the provider to the version used, so this isn’t a huge deal, but it is notable. The other assumption is that the configurations are generated using Terraform version 1.1, which means they may or may not work on versions 1.0 and earlier. In this case, the required Terraform version is not pinned.

To be very clear, none of those caveats or assumptions are show stoppers, and they should not stop you from trying it out. Speaking of which, let’s walk through an example.
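Since the Terraform version isn’t pinned for you, one option is to pin it yourself. The following is a minimal sketch, assuming you want to require the 1.1 series or later; the exact constraint is my own choice and is not something aztfy generates.

```hcl
# Sketch: add a Terraform version constraint to the generated terraform block.
# The ">= 1.1.0" constraint is an assumption based on the version the
# configuration appears to be generated with; adjust it to suit your team.
terraform {
  required_version = ">= 1.1.0"
}
```

With a constraint like this in place, older Terraform versions stop with a clear version error instead of failing on syntax they don’t understand.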

In Action

To start using the aztfy tool, we need to install it on our local system. Personally, I’m grabbing the binary from the releases area of the GitHub repository. Alternatively, you could install it with the Go toolchain using the following command: go install github.com/Azure/aztfy@latest

Once it’s installed locally, we need to make sure Azure CLI is installed and authenticated to Azure.

I’m more interested in the quality of the code created, so I’ll be taking an existing GitHub repository that I often use for demos and provisioning its resources. The demo is supposed to deploy a VM, along with the associated networking and security group settings, then build out a web server on the VM through some remote-exec magic. The aztfy tool won’t be able to recreate the provisioning setup, so I’ve eliminated it from the linked branch.

For reference, the output from the setup code is as follows:

...

Apply complete! Resources: 8 added, 0 changed, 0 destroyed.

kruddy@mbp02 hashicat-azure % terraform state list
azurerm_linux_virtual_machine.catapp_vm
azurerm_network_interface.catapp_nic
azurerm_network_interface_security_group_association.catapp_nic_sg
azurerm_network_security_group.catapp_sg
azurerm_public_ip.catapp_pip
azurerm_resource_group.demo_rg
azurerm_subnet.subnet
azurerm_virtual_network.vnet

Let’s create a new directory and use the tool to generate the supporting Terraform code to recreate all of those resources:

kruddy@mbp02 git % mkdir aztfy_hashicat
kruddy@mbp02 git % cd aztfy_hashicat
kruddy@mbp02 aztfy_hashicat % aztfy ruddy-aztfy-demo

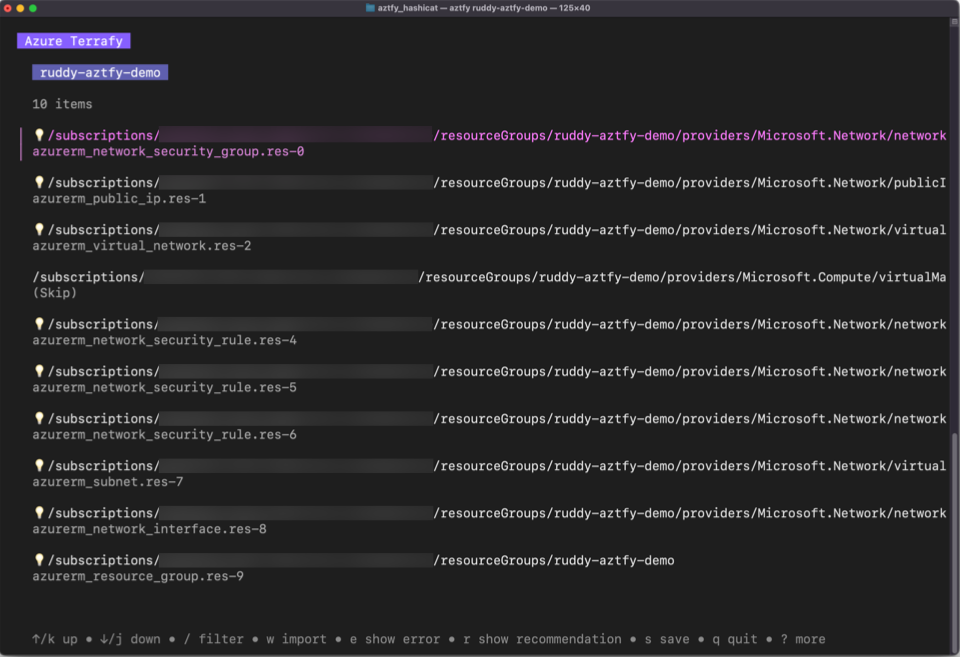

At this point it authenticates to Azure, reads all of the resources from the defined Resource Group, and displays a list of them where you can select which resources to codify and, optionally, rename the Terraform resources.

I accepted the default, which included all of the resources, and after a short wait was greeted with the following message:

Terraform state and the config are generated at: /Users/kruddy/Documents/git/aztfy_hashicat

kruddy@mbp02 aztfy_hashicat % ls
main.tf terraform.tfstate
provider.tf terraform.tfstate.backup

The provider.tf file contains the Terraform block and provider block:

terraform {
  backend "local" {}
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "2.99.0"
    }
  }
}

provider "azurerm" {
  features {}
}

The main.tf file contains definitions for 9 different resources. The following is a sample of the created code:

resource "azurerm_network_security_group" "res-0" {
  location            = "centralus"
  name                = "ruddy-sg"
  resource_group_name = "ruddy-aztfy-demo"
  depends_on = [
    azurerm_resource_group.res-9,
  ]
}

resource "azurerm_network_security_rule" "res-4" {
  access                      = "Allow"
  destination_address_prefix  = "*"
  destination_port_range      = "80"
  direction                   = "Inbound"
  name                        = "HTTP"
  network_security_group_name = "ruddy-sg"
  priority                    = 100
  protocol                    = "Tcp"
  resource_group_name         = "ruddy-aztfy-demo"
  source_address_prefix       = "*"
  source_port_range           = "*"
  depends_on = [
    azurerm_network_security_group.res-0,
  ]
}
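The hardcoded depends_on statements in that sample are a good candidate for a first cleanup pass. The following is a minimal sketch of how I might rewrite the HTTP rule by hand, assuming the security group keeps its generated name of res-0. This is my own edit, not something aztfy produces.

```hcl
# Sketch: the HTTP rule from the generated sample, with the literal names
# swapped for references to the security group resource. Terraform infers the
# ordering from these references, so the explicit depends_on can be dropped.
resource "azurerm_network_security_rule" "res-4" {
  access                      = "Allow"
  destination_address_prefix  = "*"
  destination_port_range      = "80"
  direction                   = "Inbound"
  name                        = "HTTP"
  network_security_group_name = azurerm_network_security_group.res-0.name
  priority                    = 100
  protocol                    = "Tcp"
  resource_group_name         = azurerm_network_security_group.res-0.resource_group_name
  source_address_prefix       = "*"
  source_port_range           = "*"
}
```

The references evaluate to the same strings that were hardcoded, so a terraform plan after this kind of edit should still come back clean. It’s worth running one to confirm.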

In hindsight, especially if you noticed it in the screenshot, the tool didn’t actually pick up the VM by default. I cleared the directory and ran it again. This time, I selected the VM and gave it a resource type and resource name: azurerm_linux_virtual_machine.vm

Re-checking the main.tf file, I found a 10th resource for the VM which is as follows:

resource "azurerm_linux_virtual_machine" "vm" {
  admin_password                  = null # sensitive
  admin_username                  = "hashicorp"
  custom_data                     = null # sensitive
  disable_password_authentication = false
  location                        = "centralus"
  name                            = "ruddy-webapp-vm"
  network_interface_ids           = ["/subscriptions/xxxx/resourceGroups/ruddy-aztfy-demo/providers/Microsoft.Network/networkInterfaces/ruddy-catapp-nic"]
  resource_group_name             = "ruddy-aztfy-demo"
  size                            = "Standard_A0"
  os_disk {
    caching              = "ReadWrite"
    storage_account_type = "Standard_LRS"
  }
  source_image_reference {
    offer     = "UbuntuServer"
    publisher = "Canonical"
    sku       = "16.04-LTS"
    version   = "latest"
  }
  depends_on = [
    azurerm_network_interface.res-8,
  ]
}

Now for the real test, let’s check to see what Terraform thinks of this code:

kruddy@mbp02 aztfy_hashicat % terraform plan
azurerm_resource_group.res-9: Refreshing state... [id=/subscriptions/xxxx/resourceGroups/ruddy-aztfy-demo]
azurerm_public_ip.res-1: Refreshing state... [id=/subscriptions/xxxx/resourceGroups/ruddy-aztfy-demo/providers/Microsoft.Network/publicIPAddresses/ruddy-ip]
azurerm_virtual_network.res-2: Refreshing state... [id=/subscriptions/xxxx/resourceGroups/ruddy-aztfy-demo/providers/Microsoft.Network/virtualNetworks/ruddy-vnet]
azurerm_network_security_group.res-0: Refreshing state... [id=/subscriptions/xxxx/resourceGroups/ruddy-aztfy-demo/providers/Microsoft.Network/networkSecurityGroups/ruddy-sg]
azurerm_network_security_rule.res-6: Refreshing state... [id=/subscriptions/xxxx/resourceGroups/ruddy-aztfy-demo/providers/Microsoft.Network/networkSecurityGroups/ruddy-sg/securityRules/SSH]
azurerm_network_security_rule.res-4: Refreshing state... [id=/subscriptions/xxxx/resourceGroups/ruddy-aztfy-demo/providers/Microsoft.Network/networkSecurityGroups/ruddy-sg/securityRules/HTTP]
azurerm_network_security_rule.res-5: Refreshing state... [id=/subscriptions/xxxx/resourceGroups/ruddy-aztfy-demo/providers/Microsoft.Network/networkSecurityGroups/ruddy-sg/securityRules/HTTPS]
azurerm_subnet.res-7: Refreshing state... [id=/subscriptions/xxxx/resourceGroups/ruddy-aztfy-demo/providers/Microsoft.Network/virtualNetworks/ruddy-vnet/subnets/ruddy-subnet]
azurerm_network_interface.res-8: Refreshing state... [id=/subscriptions/xxxx/resourceGroups/ruddy-aztfy-demo/providers/Microsoft.Network/networkInterfaces/ruddy-catapp-nic]
azurerm_linux_virtual_machine.vm: Refreshing state... [id=/subscriptions/xxxx/resourceGroups/ruddy-aztfy-demo/providers/Microsoft.Compute/virtualMachines/ruddy-webapp-vm]

No changes. Your infrastructure matches the configuration.

Terraform has compared your real infrastructure against your configuration and found no differences, so no changes are needed.
╷
│ Warning: Argument is deprecated
│
│   with azurerm_subnet.res-7,
│   on main.tf line 102, in resource "azurerm_subnet" "res-7":
│  102:   address_prefix = "10.0.10.0/24"
│
│ Use the `address_prefixes` property instead.
│
│ (and one more similar warning elsewhere)
╵
kruddy@mbp02 aztfy_hashicat %

That looks like a successful test to me!
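The only blemish is the deprecation warning on the subnet. The following is a rough sketch of how I’d expect to clear it, following the warning’s own advice. I didn’t include the generated subnet block above, so the name, resource group, and virtual network values are reconstructed from the resource ID in the plan output, and the depends_on entry is my assumption about what the tool generated.

```hcl
# Sketch: swap the deprecated address_prefix string for the address_prefixes
# list, as the warning suggests. The other arguments are reconstructed from
# the plan output and may not match the generated block exactly.
resource "azurerm_subnet" "res-7" {
  name                 = "ruddy-subnet"
  resource_group_name  = "ruddy-aztfy-demo"
  virtual_network_name = "ruddy-vnet"
  address_prefixes     = ["10.0.10.0/24"]

  depends_on = [
    azurerm_virtual_network.res-2, # assumed; keep whatever the tool generated
  ]
}
```

Run terraform plan again after a change like this to confirm that the warning is gone and there are still no changes to apply.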

What’s Next?

We’ve seen how the aztfy tool can be used to read a resource group and automatically generate the Terraform configuration needed to keep managing its resources through Terraform. Are we done?

Honestly, no. We’ve jumped one of the biggest hurdles, which is defining the resources, but there’s still more work to be done. Composability and reusability are big parts of infrastructure as code and, right now, everything is defined in a very static way. This is also likely the cause behind all of the depends_on usage that I referred to earlier. At a minimum, the state is currently stored locally and should be moved to secure remote storage. The new cloud block is an excellent way to push the generated state file to Terraform Cloud. Further, I would go through and add variables so that this one particular set of resources could be used as a source for creating multiple sets of similar infrastructure. Getting more advanced, you’d want to refactor the code into a module, or set of modules, to further simplify the reuse of the generated configurations. For the existing resources, check out the moved block, which was added in Terraform 1.1 and greatly simplifies moving resources around and refactoring your configuration code.

Lastly, the example I showed was also very simple. I would expect most resource groups to have a little more than a single VM and the associated dependencies. This would require multiple runs of the aztfy tool in order to separate out the Terraform configurations based on how your organization operates.
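To make those next steps a little more concrete, here’s a rough sketch of the first few, assuming a Terraform Cloud organization and workspace already exist. The organization name, workspace name, variable names, and the catapp_sg resource name are all placeholders I made up for illustration.

```hcl
# provider.tf: replace the generated `backend "local" {}` with a cloud block.
# A terraform block cannot contain both. Requires Terraform 1.1 or later.
terraform {
  cloud {
    organization = "example-org" # hypothetical organization name

    workspaces {
      name = "aztfy-hashicat" # hypothetical workspace name
    }
  }

  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "2.99.0"
    }
  }
}

# variables.tf: hypothetical variables replacing the hardcoded values.
variable "prefix" {
  type    = string
  default = "ruddy"
}

variable "location" {
  type    = string
  default = "centralus"
}

# main.tf: the security group with a friendlier name and variable inputs.
# Anything that still refers to res-0 needs to be updated to match.
resource "azurerm_network_security_group" "catapp_sg" {
  location            = var.location
  name                = "${var.prefix}-sg"
  resource_group_name = azurerm_resource_group.res-9.name
}

# Tell Terraform the existing object was renamed rather than replaced.
moved {
  from = azurerm_network_security_group.res-0
  to   = azurerm_network_security_group.catapp_sg
}
```

After swapping the backend for the cloud block, running terraform init should offer to migrate the existing local state to the workspace. With the defaults above, the variables resolve to the same values that were hardcoded, so the follow-up plan should report the move and nothing else.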